Data. It is everything.

Git. Not perfect, but branching makes up for other shortcomings.

Branching Data. Ow, my brain hurts.

Backstory

Game companies have three types of asset creators: programmers, artists, and designers.

- Programmers make code, just like in any other industry, and Git branching works really well for that. Our changes rarely collide on the exact same lines, and when they do, a human needs to get involved to figure out what was intended.

- Artists make binary assets like Photoshop files, 3D models, animations, etc. Git is poorly suited to these, even with LFS, but we limp along OK because it's very rare to change these assets in branches or to expect to merge the results.

- Designers have it the worst, though. Their work is almost always textual and almost always needs to be modified by others in branches. It often resembles a database, which is definitely complex to merge. Because tools are normally lacking, and designers' technical skills generally are too, software decisions are often made without taking everything into account, causing problems as the team scales.

In multiple companies, I've worked with a design team that chose Google Sheets as the place to store their data. It was always suggested by a well-meaning engineer who wrote a trivial export routine and saw how easy it was. Being able to edit any piece of data from anywhere in the world is so appealing! In three separate companies, I have watched, without surprise, as this became a massive bottleneck for the whole team. Why did it fail?

- Google Sheets has a rate limiter on export. If you ever have more than a few hundred lines of data, you rapidly hit this limit. The amount of time required to build a multithreaded exporter with tolerance for rate limiting is substantial. The amount of time it takes to export grows linearly with your dataset. At one company, it took 45 minutes to save out the data, this data that you created online to make your life easier.

- Google Sheets can't be checked into Git, so there is no safe way to branch the data. People have attempted to hack around this with commit hooks, duplicating and renaming sheets, creating them dynamically based on branch names, etc. It's a LOT OF WORK. And none of it knows anything about merging, so it's a one-way path to making more copies of the data, not maintaining a single source of truth. And honestly, that's the whole point of revision control.

At a couple of those companies, the engineering manager agreed once I pointed this out, and we pivoted. To what? Excel files.

This was problematic in a different way, to be honest. Excel files are binary and do not merge well. There are ways to get online/shared editing to work. There are ways to merge .XLS files, and perhaps .XLSX too. I honestly gave up after a few weeks of dealing with the conflicts. Either we modified each file in only one branch and never anywhere else, or we needed to find a cleaner solution.

Enter CSV

The first and most obvious step was to have two folders for data. The first held the source data: .XLSX files, which might contain formulas. The second held the exported, game-ready data, which was easy to read (CSV is a trivial format and well supported by libraries). When a source file conflicted, you could simply diff the exported CSV files, see what had changed, and figure out which side was easiest to redo.
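Diffing the exported CSVs line by line works, but a cell-level diff keyed on the primary-key column is often easier to read, because it ignores row moves and names the exact column that changed. Here is a minimal sketch using only Python's standard `csv` module; `keyed_diff` is a hypothetical helper, and the `objID` key column and the sample data are assumptions for illustration.

```python
import csv
import io


def keyed_diff(old_text, new_text, key="objID"):
    """Compare two CSV snapshots, matching rows on the `key` column.

    Returns (added_keys, removed_keys, changed), where `changed` maps each key
    to {column: (old_value, new_value)} for the cells that differ.
    """
    old = {row[key]: row for row in csv.DictReader(io.StringIO(old_text))}
    new = {row[key]: row for row in csv.DictReader(io.StringIO(new_text))}

    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = {}
    for k in sorted(old.keys() & new.keys()):
        # Keep only the columns whose value differs between the snapshots.
        cells = {
            col: (old[k].get(col), new[k].get(col))
            for col in old[k].keys() | new[k].keys()
            if old[k].get(col) != new[k].get(col)
        }
        if cells:
            changed[k] = cells
    return added, removed, changed


old_csv = "objID,name,hp\n1,goblin,10\n2,orc,20\n"
new_csv = "objID,name,hp\n1,goblin,12\n3,troll,40\n"
print(keyed_diff(old_csv, new_csv))  # (['3'], ['2'], {'1': {'hp': ('10', '12')}})
```

The output reads as "row 3 added, row 2 removed, row 1 had its hp changed from 10 to 12", which is usually enough to decide which side of a conflict to redo.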

Then it dawned on us: let's just ditch formulas in the source data and edit the CSV files directly. Definitely not as pretty, definitely not as slick, but damn, it works.

The Catch?

CSV files hold tabular data, one row per line, and Git merges line by line. Adjusting a single column of data modifies every line in the file, and it's very common for another branch to add or remove a single row. Boom! Git cannot resolve these conflicts, because both branches touched the same (or adjacent) lines of the file. What a drag.
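To see why a table-aware merge can succeed where Git's line-based merge fails, here is a minimal sketch of a cell-level three-way merge: rows are matched by a primary-key column and each cell is merged independently. It illustrates the general technique, not Coopy's actual algorithm, and assumes unique keys and the same columns in all three versions; `merge3`, `objID`, and the sample data are hypothetical.

```python
import csv
import io


def read_table(text, key):
    """Parse CSV text into (header, {key value: row dict}), keeping file order."""
    reader = csv.DictReader(io.StringIO(text))
    rows = {row[key]: row for row in reader}
    return reader.fieldnames, rows


def merge_cell(base, ours, theirs):
    """Three-way rule for one value; returns (value, conflicted)."""
    if ours == theirs:
        return ours, False
    if ours == base:
        return theirs, False  # only their side changed it
    if theirs == base:
        return ours, False  # only our side changed it
    return ours, True  # both changed it differently: keep ours, flag it


def merge3(base_text, ours_text, theirs_text, key="objID"):
    """Cell-level three-way merge of CSV text; returns (merged_text, conflicts)."""
    header, base = read_table(base_text, key)
    _, ours = read_table(ours_text, key)
    _, theirs = read_table(theirs_text, key)

    merged, conflicts = [], []
    # Our row order first, then any rows that only their side added.
    for k in list(ours) + [x for x in theirs if x not in ours]:
        b, o, t = base.get(k), ours.get(k), theirs.get(k)
        if o is None or t is None:
            kept = o if o is not None else t
            if b is None:
                merged.append(kept)  # row added on one side only
            elif kept != b:
                # One side deleted the row, the other edited it.
                conflicts.append((k, "deleted vs. edited"))
                merged.append(kept)
            # Otherwise one side deleted an untouched row: leave it out.
            continue
        row = {}
        for col in header:
            row[col], clash = merge_cell(b.get(col) if b else None, o.get(col), t.get(col))
            if clash:
                conflicts.append((k, col))
        merged.append(row)

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=header, lineterminator="\n")
    writer.writeheader()
    writer.writerows(merged)
    return out.getvalue(), conflicts


base_csv = "objID,name,hp\n1,goblin,10\n2,orc,20\n"
ours_csv = "objID,name,hp\n1,goblin,15\n2,orc,25\n"  # rebalanced the hp column
theirs_csv = "objID,name,hp\n1,goblin,10\n2,orc,20\n3,troll,40\n"  # added a row

merged, conflicts = merge3(base_csv, ours_csv, theirs_csv)
print(merged)
print(conflicts)
```

In this run both edits survive: rows 1 and 2 keep the rebalanced hp values, row 3 is appended, and no conflicts are reported. A cell changed differently on both sides would instead be listed in `conflicts` (with our value kept), which is the case that still needs a human.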

After a little hunting, I discovered an amazing tool called Coopy, which can do all sorts of things but, in particular, can merge tabular data such as CSV files! However, it's a little quirky.

Our team also uses a pretty nice GUI Git client called SmartGit, which even most non-technical people can manage to use. Unfortunately, the files it works with during a merge are named in a way that breaks Coopy. I can fix that.

The other thing is, I wanted to make this work without requiring special configuration or a specific client, because some of the programmers like using the Git CLI. I don't understand it, but I'm happy to support it. :-)

Lastly, we have a /Tools/bin folder in our git repo that everyone on our team is required to add to their %PATH% variable. I know it's a security risk in theory, but in practice it's been massively useful for rolling out new tools and processes without impacting people. We are a private company, with a private repo, and we can take that risk. In this folder, there's a setup.bat that configures stuff so that the project is ready to go, setting up symlinks or configuring hidden .git files, etc.

Steps to Set Up CSV Merging (for Windows)

- Download Coopy and put it in a subfolder of /Tools/bin named coopy, so that its executables are not themselves on the PATH.

- Have every member of the team (and your server) edit /.git/config and add this block:

```
[merge "merge-csv"]
	name = CSV merge
	driver = ssmerge.bat %O %B %A
	recursive = merge-csv
```

- Add this line to .gitattributes in the root of your repository:

```
*.csv merge=merge-csv
```

- Create a batch file /Tools/bin/ssmerge.bat and paste this into it:

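```bat
@echo off
rem Arguments from Git (see .git/config): 1 = base, 2 = theirs, 3 = ours.
rem Copy them to names with a .csv extension so Coopy accepts them.
copy /Y "%~1" ssmerge_base.csv >nul
copy /Y "%~2" ssmerge_left.csv >nul
copy /Y "%~3" ssmerge_right.csv >nul
rem Three-way merge keyed on the objID column; the result overwrites the right-hand copy.
"%~dp0coopy\bin\ssmerge.exe" ssmerge_base.csv ssmerge_left.csv ssmerge_right.csv --output ssmerge_right.csv --id=objID
rem Save the merge exit code: Git treats non-zero as "still conflicted".
set MERGE_RESULT=%ERRORLEVEL%
rem Git expects the merged result in the third argument's file.
copy /Y ssmerge_right.csv "%~3" >nul
erase /Q ssmerge_base.csv ssmerge_left.csv ssmerge_right.csv
exit /b %MERGE_RESULT%
```

What and why these steps?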

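The files Git hands to a merge driver are temporary copies with names like .merge_file_XXXXXX (Git generates these names itself, so this happens with any client, not just SmartGit), and Coopy prefers a .csv extension. So this batch file simply copies them to known-good filenames, performs the merge, then copies the output back over the file passed as %A, which is where Git expects the merged result.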
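The same copy, merge, copy-back idea can also be written as a small cross-platform merge driver in Python, for teammates not on Windows. This is a sketch under assumptions: the script name `csv_merge_driver.py` and the `SSMERGE` environment variable are hypothetical, it only reuses the `ssmerge` arguments already shown in the batch file, and it simply passes on whatever exit code `ssmerge` returns, so check how Coopy reports unresolved conflicts before relying on that.

```python
import os
import shutil
import subprocess
import sys
import tempfile


def run_merge(base, theirs, ours, merger, key="objID"):
    """Run a CSV merger on Git's three temp files and write the result to `ours`.

    `base`, `theirs` and `ours` are the paths Git substitutes for %O, %B and %A;
    Git expects the merged result in %A. `merger` is a command prefix such as
    ["ssmerge"]. Returns the merger's exit code, which Git reads as
    0 = clean merge, anything else = conflict.
    """
    with tempfile.TemporaryDirectory() as tmp:
        # Coopy wants a .csv extension, so copy to known-good names first.
        paths = {}
        for label, src in (("base", base), ("left", theirs), ("right", ours)):
            paths[label] = os.path.join(tmp, f"ssmerge_{label}.csv")
            shutil.copyfile(src, paths[label])
        result = subprocess.run(
            merger
            + [paths["base"], paths["left"], paths["right"],
               "--output", paths["right"], f"--id={key}"]
        )
        # Copy the (possibly merged) right-hand file back over %A.
        shutil.copyfile(paths["right"], ours)
    return result.returncode


if __name__ == "__main__" and len(sys.argv) == 4:
    # Hypothetical wiring: driver = python csv_merge_driver.py %O %B %A
    # SSMERGE is a hypothetical variable pointing at Coopy's ssmerge binary.
    sys.exit(run_merge(*sys.argv[1:4], merger=[os.environ.get("SSMERGE", "ssmerge")]))
```

Registering it would follow the same pattern as the batch file, e.g. `driver = python csv_merge_driver.py %O %B %A`, assuming `python` is on the PATH. Doing the work in a temporary directory also keeps the intermediate .csv copies out of the working tree.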

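The block inside /.git/config tells Git to call the batch file whenever both branches have changed a .csv file, instead of attempting its own line-based merge. Git judges the outcome by the driver's exit code: zero means the merge is clean, anything else leaves the file marked as conflicted. Because Git itself triggers the driver as needed, it works with any Git client.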
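Since .git/config lives in each clone and is never committed, the block has to be added once per machine. A setup script (such as the setup.bat in /Tools/bin) could do that with `git config --local` instead of asking everyone to hand-edit the file. Here is a minimal Python sketch; `config_commands` and `install_merge_driver` are hypothetical helpers, and the values simply mirror the [merge "merge-csv"] block.

```python
import subprocess

# Mirrors the [merge "merge-csv"] block from the setup steps.
MERGE_CSV_SETTINGS = {
    "merge.merge-csv.name": "CSV merge",
    "merge.merge-csv.driver": "ssmerge.bat %O %B %A",
    "merge.merge-csv.recursive": "merge-csv",
}


def config_commands(settings=MERGE_CSV_SETTINGS):
    """Build one `git config --local <key> <value>` command per setting."""
    return [["git", "config", "--local", key, value] for key, value in settings.items()]


def install_merge_driver(repo_dir="."):
    """Write the driver settings into repo_dir's .git/config using git itself."""
    for cmd in config_commands():
        # An argument list (no shell) keeps %O, %B and %A literal.
        subprocess.run(cmd, cwd=repo_dir, check=True)
```

Re-running it is harmless, because `git config` overwrites an existing single value for the same key. The .gitattributes line, by contrast, is committed with the repository, so it only has to be added once.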

Does it work well?

Yes, pretty well. I made four different branches, modified the same .csv file in various ways, and rebased across the branches, and in every case the data was applied in the expected order. I did notice some odd outputs once in a while before I added the --id=<primarykey> flag, which I think helps Coopy match up rows correctly. If you have different kinds of data with different primary keys, you could set up multiple merge driver declarations, I suppose. Our data is all normalized, so it works out.
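One way to handle several primary keys is a separate merge driver per key, each selected by a .gitattributes pattern. This sketch generates those settings; it assumes a hypothetical variant of ssmerge.bat that takes the key column as a fourth argument (--id=%4) instead of hard-coding --id=objID, and the `Data/Items` folder and `itemID` column are made-up examples.

```python
def per_key_drivers(key_by_pattern):
    """Build git config settings and .gitattributes lines, one driver per key.

    `key_by_pattern` maps a .gitattributes path pattern to its primary-key
    column. Assumes a hypothetical ssmerge.bat variant that reads the key from
    its fourth argument (--id=%4) instead of hard-coding --id=objID.
    """
    settings, attributes = {}, []
    # When several .gitattributes lines match a path, the later line wins,
    # so callers should list general patterns before specific ones.
    for pattern, key in key_by_pattern.items():
        driver = f"merge-csv-{key}"
        settings[f"merge.{driver}.name"] = f"CSV merge keyed on {key}"
        settings[f"merge.{driver}.driver"] = f"ssmerge.bat %O %B %A {key}"
        settings[f"merge.{driver}.recursive"] = driver
        attributes.append(f"{pattern} merge={driver}")
    return settings, attributes


settings, attributes = per_key_drivers({
    "*.csv": "objID",              # default key
    "Data/Items/*.csv": "itemID",  # hypothetical folder with a different key
})
print("\n".join(attributes))
```

Each generated setting can be applied with `git config --local`, and the attribute lines go into .gitattributes. Order matters there: when several lines match the same path, the later one wins, so the general *.csv line has to come before the more specific ones.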

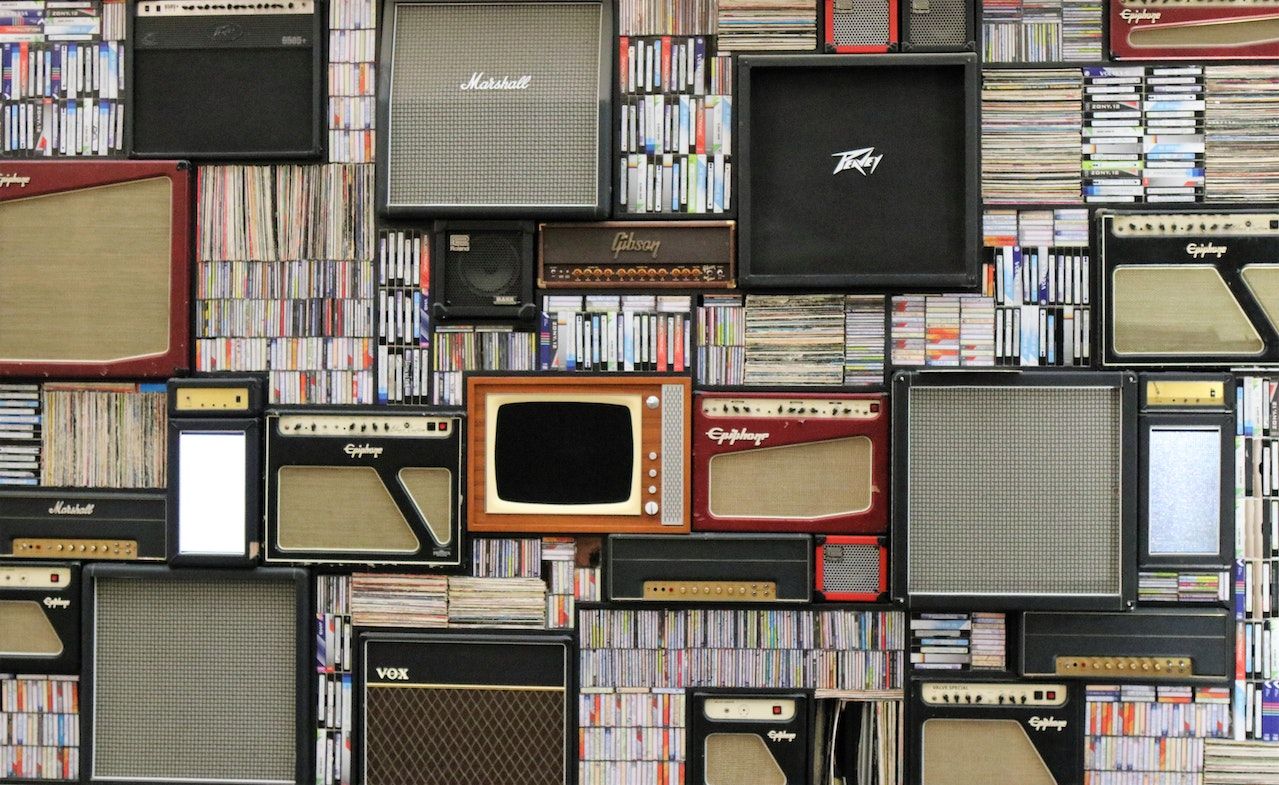

Thanks to Expect Best for that awesome cover photo!