As a programmer, it's common to write the same thing during your career over and over again. Sometimes a little different this time, a little better the next time, but often it's literally the exact same output from the exact same inputs that you need, but in a different language, because tools that work get used repeatedly, and sometimes you reach into your drawer full of tools and realize you don't have the one you're expecting to find at your fingertips. Today, it was CRC32.

I was hurrying to build a hashed number sequence with low collisions for a large set of inputs. I dug around and found MurMurHash32 came recommended. (It turns out this is an algorithm invented by Austin Appleby, who I worked with many years ago at Retro Studios.) In my test harness, I was getting about 5 million collisions from 150 million inputs.

This was ok, but I really wanted to see if I could do better with my handy dandy CRC32. And it wasn't there. Why on earth such a useful algorithm would not be provided in Microsoft's massive .NET libraries by now just surprises me. Naturally, there are dozens of implementations online and most are over-written or make too many assumptions. So there I go, rewriting a CRC32 routine again, based off someone else's code again, then testing it against some standard inputs again, ad nauseum. Of course, I have this and hundreds of other useful routines sitting somewhere on my hard drive... somewhere safe, where in case I ever needed it again in C++, I could just grab it and use it. The internet is somehow easier than locating my own code, sadly. But I need it in C#, and I needed it now. Well, here it is, for all time, the simplest C# CRC32 you can get on the internet in case you are ever looking for it:

// Table driven CRC32. Matches the output of CRC32/JAMCRC https://crccalc.com/

private static UInt32[] _checksumTable = null;

public static UInt32 Crc32(string s)

{

const UInt32 _generator = 0xEDB88320;

if (_checksumTable==null)

{

_checksumTable = new UInt32[256];

for (uint i=0; i<256; i++)

{

uint v = i;

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

v = ((v & 1) * _generator) ^ (v >> 1);

_checksumTable[i] = v;

}

}

// Run through every character in the string and compute the CRC32

uint result = 0xFFFFFFFF;

foreach (char c in s)

{

result = (result >> 8) ^ _checksumTable[(byte)((byte)c ^ result)];

}

return result;

}For the record, CRC32 is slower than MurMurHash32, primarily because it operates one byte at a time (in this implementation), but scrambles bits much better. It yielded me 2.5 million collisions for the same 150 million inputs. Of course, I attempted combining the two in various ways, but it gave me no better results.

Time to step back

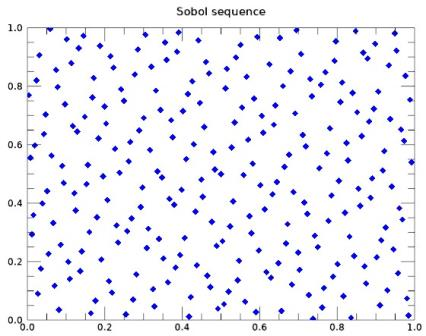

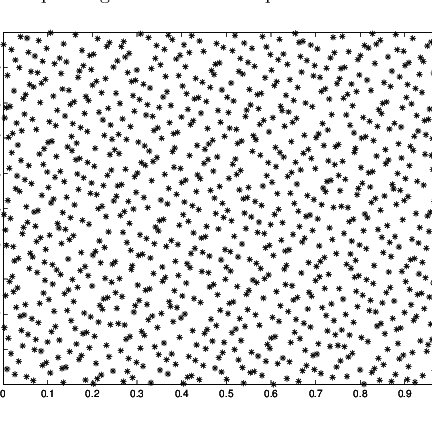

In the distant past, I remember learning about something called a low discrepancy sequence, and the related concept of quasi-random sequences. Obscure math comes in handy sometimes. When taking in orderly inputs and trying to generate disorder, the simplest (and wrong) approach is to destroy the information content by mixing the bits together willy-nilly. Rather, consult the dusty corners of mathematics and if you can locate the right keyword, you may be rewarded with a solution. However, because this is actually a very dusty corner, and I have an allergy to using 3rd party libraries without source code or commercial-friendly licenses, it became quickly obvious there aren't enough users out there to just hunt for Halton or Sobel sequence generator code in C#. I did find it in Fortran of all things. Not helpful.

So, what did I do? I gave up and wrote my own damn code. Of all the things, I knew exactly what the requirements were. I already had orderly inputs and wanted them to appear random but have no collisions. The most obvious thing to do was to rearrange the bits into different places, then XOR a mask against the result so there is more noise in the output. The resulting code is very simple, and a lot faster than CRC32, and has the benefit of zero collisions. Had I used someone else's fancier math solution, I'd still be playing with it and learning its faults and features.

For what it's worth, the mathematical exploration of number spaces and information theory can be fascinating when given a concrete objective and a little time to investigate what research is readily available. If you want to learn more about these topics, I suggest you check out this really interesting article. I found others, but this one explained the concepts well and described a new, very simple technique and its relationship to numerous older methods. Here's my quick draft of the 1D case.

// This is the simple LDS described on http://extremelearning.au

public static uint LowDiscrepancySequence(uint v)

{

double a1 = 1.0/1.6180339887498948482;

double r = (0.5 + a1*v);

double frac = r - Math.Truncate(r); // r is a positive number, always

uint result = (uint)(uint.MaxValue * frac); // frac is [0..1)

return result;

}I would also point out that this has 2.7 million collisions with my 150 million inputs set, so I'm sticking with my version. 😊