Ok, sorry for the clickbait. There are no 3 easy steps, but you can absolutely build a cloud at home... if you happen to be the kind of person who buys several servers from a shady figure in the alley behind a colo datacenter in the dead of night, and then installs them in a small rack enclosure in your garage. As a purely hypothetical example, I mean.

The object is to drive the servers entirely remotely and let them function as throwaway devices for Kubernetes (eventually), kind of like AWS or GCE. It's taken me a lot more time to figure out than I'm happy admitting, but I'm happy to ELI5 it for you and save you a ton of time by getting the basics from one place.

Further, because I am a huge fan of portable solutions, I wanted the brain of the configuration to live in a Docker container. My rationale here is that once the configuration works, deploying it to more racks or moving the whole system to a larger installation is trivial, as you just copy the config folders and light it up, without going through the effort of reinstalling and reconfiguring everything.

Basic Configuration

- One small server, the master, with a local static IP (10.0.0.200 in my case). I am using an older Mac Mini wiped to run Ubuntu 18.04 Desktop, because Ubuntu Server didn't properly detect the network drivers.

- One or more slave servers with IPMI and PXE enabled on one of the network ports. I have some older Dell R620s with plenty of RAM; I threw away the HDDs and installed a fast SSD in each.

- Ubuntu's Metal-as-a-Service (MAAS) installed inside a Docker container on the master.

- A good quality firewall/gateway running at 10.0.0.1 where you can edit advanced DHCP properties. I have a Ubiquiti Networks Security Gateway, which is pretty slick.

- All the slave machines and the master are on the same subnet (10.0.0.0/24 in my case).

While MAAS is not new, it's pretty snazzy. Most people fail to get it working properly because PXE booting a server requires some parameters to be passed to it in the initial DHCP exchange. I, like most, foolishly assumed the IP address was all that mattered, but PXE literally asks DHCP for a boot filename and the address of the server to fetch it from (over TFTP). So either you must be able to supply these from the gateway running DHCP, or you need to set everything up the way MAAS tells you to, where PXE and the master are isolated in their own VLAN or separate subnet and MAAS runs DHCP itself. I'm kind of terrible as a network engineer, and just wanted it to work simply. So I figured it out with an external DHCP server and one big subnet to rule them all.
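If you want to see that filename-and-server handshake for yourself, here is a minimal sketch that watches DHCP traffic with tcpdump (which the container image further down installs anyway). The function name watch_pxe_dhcp and the interface name you pass in are my own placeholders, and it needs root to capture packets.

```sh
# Sketch: print decoded DHCP traffic so you can check whether the reply
# to a PXE client carries a boot filename and a boot server address.
# The interface name is whatever your machine calls its LAN port.
watch_pxe_dhcp() {
    iface=$1
    # -n: skip DNS lookups; -v: decode the BOOTP/DHCP fields and options
    tcpdump -i "$iface" -n -v 'udp port 67 or udp port 68'
}
```

Run something like watch_pxe_dhcp eno1 on the master, power-cycle a server set to PXE boot, and look for the boot file and server address fields in the DHCP reply. If they are missing, the gateway is not handing them out yet.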

MAAS has a Region controller and a Rack controller as separate processes, in case you need multiple racks in a datacenter (region) all controlled from a single place. The Rack controllers register with the Region, so it's extensible. This configuration has them both in the same Docker container to make it easy to set up. You can always separate them later, or just add more rack controllers to this config anyway.

IPMI may not be familiar to you if you don't work with real servers. What differentiates servers from typical PCs is that you can remotely manage them through a special network port that stays powered and able to respond even while the server itself is powered OFF. This is a huge security risk, so the port is protected by a username and password, and even then it is usually segmented away from normal network traffic. As I said, I'm lazy, so mine are all on the main network. Once it's working, I'll move them. See VLAN Tagging if you are into doing it right.

While I did look at all the existing Dockerized MAAS containers out there (I'm not kidding, I tried them all), I didn't find one that really worked right, or that worked simply enough to just launch. So I made one. I'm giving it to you here. Notice, I'm using a docker-compose.yaml on top of the Dockerfile rather than straight Docker, because compose handles setting up networks for clusters of processes better, and ideally I'd like to pull Postgres into its own container at some point and thin down the MAAS container. Compose also lets you change to a macvlan bridge with tagged VLAN subinterfaces with only a few keystrokes, and it automatically creates it for you, fully configured. If that was a mouthful of gobbledygook, ignore it--but if you want your head to spin, you could Google that shit up and learn something clever. Like I said, this config is just a bunch of wires plugged into a switch, for simplicity.

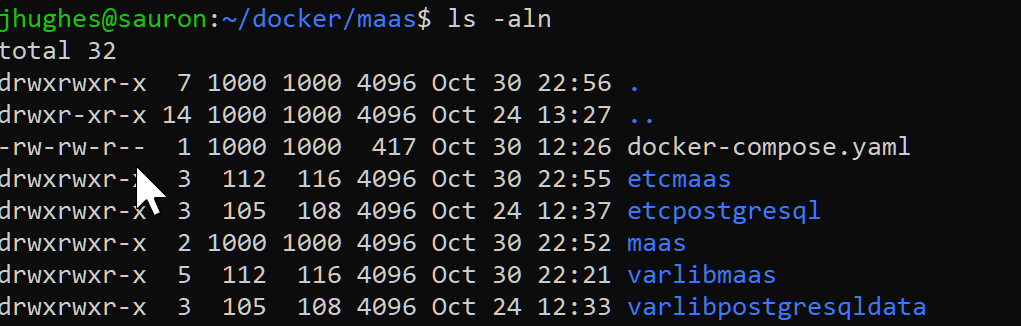

Go to your master server and make yourself a folder someplace to work out of. Here's what the contents of that folder should look like, on a machine that has Docker installed:

Note that the maas folder is going to contain the Dockerfile that builds your image locally. The other four folders (varlibpostgresqldata, varlibmaas, etcmaas and etcpostgresql) are local host folders that will contain the PostgreSQL database, the rather large MAAS OS images that you wouldn't want to re-download every time your Docker machine resets, and the /etc/ configs for MAAS and PostgreSQL, simply because it's useful to be able to modify them and have that stick. The uid/gid combinations on these folders are critical to get right, too. Just make them empty folders for now.
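As a minimal sketch, the layout can be created like this. The folder names come from the volume mounts in the compose file below; the working directory name maas-docker is just my placeholder, so put it wherever you like.

```sh
# Sketch: create the empty working folder layout on the master.
# WORKDIR is a hypothetical location; override it to taste.
WORKDIR="${WORKDIR:-$PWD/maas-docker}"
mkdir -p "$WORKDIR"
cd "$WORKDIR" || exit 1

# maas/ holds the Dockerfile; the other four are bind-mounted into the container.
mkdir -p maas varlibpostgresqldata varlibmaas etcmaas etcpostgresql

# Empty placeholders for the two files you will paste in next.
touch docker-compose.yaml maas/Dockerfile
```

The four data folders start out owned by your own user; the ownership the container wants gets sorted out during the first run.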

    version: '3.7'
    services:
      region:
        build: ./maas
        image: maas:latest
        container_name: maas
        restart: unless-stopped
        privileged: true
        ports:
          - "5240-5280:5240-5280"
          - "69:69/udp"
        volumes:
          - ./varlibpostgresqldata:/var/lib/postgresql
          - ./varlibmaas:/var/lib/maas
          - ./etcmaas:/etc/maas
          - ./etcpostgresql:/etc/postgresql
        network_mode: "host"

Knowing the key points in this docker-compose file will save you a lot of headaches. The container is privileged so it can bind low port numbers (69, to allow PXE boots to TFTP the boot image), and the network mode is host so it literally exposes all the host's interfaces to the container, rather than creating a separate networking space isolated from the physical network. Host networking is required to do machine discovery and IPMI. In host mode Docker does not actually apply the ports mappings, so treat that section as a record of which ports MAAS listens on.

And the Dockerfile goes in the maas folder:

    FROM ubuntu:18.04

    ENV DEBIAN_FRONTEND noninteractive
    ENV container docker

    # Don't start any optional services except for the few we need.
    RUN find /etc/systemd/system \
             /lib/systemd/system \
             -path '*.wants/*' \
             -not -name '*journald*' \
             -not -name '*systemd-tmpfiles*' \
             -not -name '*systemd-user-sessions*' \
             -exec rm \{} \;

    # Everything below sets up MAAS in the systemd-initialized
    # container based on Ubuntu 18.04.

    # Install MAAS from the 2.6 PPA.
    RUN apt-get -y update && \
        apt-get -y install software-properties-common && \
        add-apt-repository ppa:maas/2.6 && \
        apt-get -y update && \
        apt-get -y install maas maas-cli rsyslog

    # Install PostgreSQL and a few supporting tools.
    RUN rsyslogd; apt-get -y install avahi-utils \
        dbconfig-pgsql \
        iputils-ping \
        postgresql \
        tcpdump \
        python3-pip \
        sudo

    # Potentially used to calculate CIDRs.
    RUN pip3 install netaddr

    # Initialize systemd.
    CMD ["/sbin/init"]

Just pasting these files and constructing these folder structures will be enough for you to create the image. It takes a while, because it's installing a bunch of stuff into an Ubuntu 18.04 image. It's more like a VM and less like a container, because it's fat.

Important: When you first create the Docker image, the four mounted folders inside the container (/var/lib/postgresql, /var/lib/maas, /etc/maas and /etc/postgresql) already have data in them. You need this data, and mounting your empty host folders straight over them would hide it. On the first run you will want to do this:

- Build the image:

      docker-compose build

- Edit the docker-compose.yaml file and add a '2' at the end of the container-side path of each volume, e.g.

      ./etcmaas:/etc/maas2

  so the volumes won't actually overlap the folders that already hold data.

- Start the container:

      docker-compose up -d

- Enter the running container so we can copy out the folder data:

      docker exec -it maas /bin/bash

  Use docker exec here rather than docker run: docker run would start a second, fresh container from the image, without the volumes from the compose file.

- Inside the container, fix the ownership of the mounted folders:

      chown maas:maas /etc/maas2
      chown maas:maas /var/lib/maas2
      chown root:root /etc/postgresql2
      chown postgres:postgres /var/lib/postgresql2

  This sets the permissions on the host's folders properly to match those the container wants.

- Copy the data out:

      cp -Rp /var/lib/maas/. /var/lib/maas2/
      cp -Rp /var/lib/postgresql/. /var/lib/postgresql2/
      cp -Rp /etc/maas/. /etc/maas2/
      cp -Rp /etc/postgresql/. /etc/postgresql2/

  This copies out all the folder contents with permissions set right. The trailing /. copies the contents of each folder rather than nesting the folder itself inside the target.

- Control-D to exit the container.

- Edit the docker-compose.yaml file to remove the '2' from each of the folders. Kinda sucks that this takes a detour through renamed mounts, but this process does work perfectly.

- Recreate the container so it picks up the changes to the volume mounts (a plain docker-compose restart does not re-read the compose file):

      docker-compose up -d

- Go back into the container:

      docker exec -it maas /bin/bash

  Postgres especially is picky about the uid/gid being right. If anything goes wrong with your changes, you will see errors connecting to the database in the /var/log/syslog file.

- Configure the MAAS system:

      maas init

  It asks for a username and password, which you will use to log in to the web controller. Don't sweat it if your public ssh key is rejected, your user/pass is enough.

- Control-D to exit the container. You should have a properly running MAAS now.

- One more thing. Critical thing. Really important. Edit the etcmaas/rackd.conf and etcmaas/regiond.conf so that maas_url has your docker host's IP address in it, not localhost, eg.

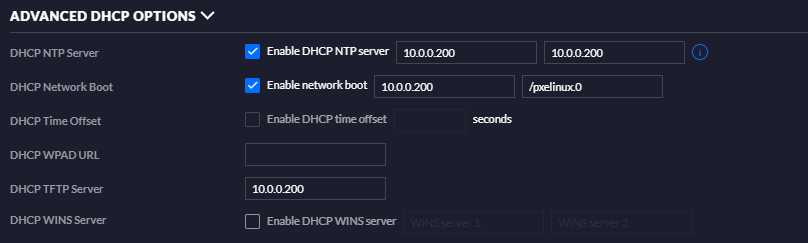

maas_url: http://10.0.0.200:5240/MAAS. If you don't do this, whenever a server tries to power on and report in that it's alive, it will try to talk to localhost and fail to reach the MaaS container. Since we're exposing the 5240 port onto the Docker host's machine for the world to see, that's where it needs to be told to go. - Go configure your DHCP server to tell PXE booters where to get their boot image from. With my Ubiquiti Security Gateway, that section looks like this under Settings->Networks->LAN->DHCP. Yours may look different, or require some research to supply these arguments, but suffice it to say, these are the ones you will need to send with external DHCP packets.
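The file-shuffling parts of the steps above can be wrapped in two small helpers. This is only a sketch under my own assumptions: the function names seed_volume and set_maas_url are made up, and set_maas_url assumes both config files carry a top-level line starting with maas_url:, which is how mine looked.

```sh
# Sketch: copy a folder's contents into its temporary '2' mount,
# after giving the mount the owner the container expects.
# Meant to be run inside the container, as root.
seed_volume() {
    owner=$1
    src=$2
    dst=$3
    # "src/." copies the contents, not the folder itself; -p keeps owner and mode
    chown "$owner" "$dst" && cp -Rp "$src/." "$dst/"
}

# Sketch: point maas_url at the Docker host in both MAAS config files.
# Meant to be run on the host against the etcmaas folder.
set_maas_url() {
    conf_dir=$1
    url=$2
    for f in "$conf_dir/rackd.conf" "$conf_dir/regiond.conf"; do
        # -i.bak edits in place and leaves a .bak copy next to each file
        sed -i.bak "s|^maas_url:.*|maas_url: $url|" "$f" || return 1
    done
}
```

Inside the container that would be, for example, seed_volume maas:maas /etc/maas /etc/maas2, repeated for the other three folders with the owners listed above. On the host, set_maas_url ./etcmaas http://10.0.0.200:5240/MAAS does the last edit (run it with sudo if the files are no longer writable by your user).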

You should be able to go to your master's IP address and the web MAAS port (http://10.0.0.200:5240/) and see the MAAS page now.
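If the page doesn't come up, two quick probes from any machine on the subnet can narrow it down. This is a sketch assuming curl is installed and built with TFTP support (the stock one usually is); the function names are mine, and the boot filename is deliberately left as an argument because it has to match whatever your DHCP server hands out.

```sh
# Sketch: succeed if the MAAS web UI answers over HTTP.
maas_ui_up() {
    url=${1:-http://10.0.0.200:5240/MAAS/}
    # -f: fail on HTTP errors; -sS: quiet but show errors; -L: follow redirects
    curl -fsSL -o /dev/null --max-time 5 "$url"
}

# Sketch: succeed if the MAAS TFTP server hands out the given boot file.
tftp_serves() {
    host=$1
    file=$2
    curl -sS -o /dev/null --max-time 10 "tftp://$host/$file"
}
```

Calling maas_ui_up with no arguments checks the address used in this post. tftp_serves 10.0.0.200 followed by your boot filename checks the other half of the PXE path; a failure there usually points at port 69/udp or the rack controller rather than at DHCP.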

Setting Up Slaves

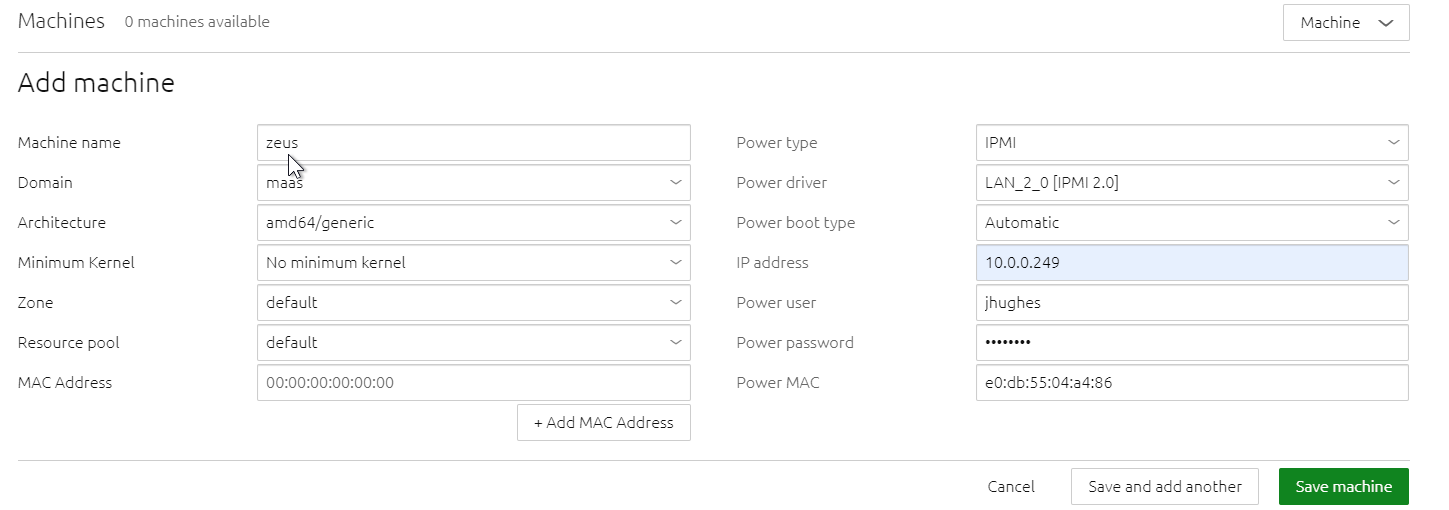

Now that you have MAAS running, and it's on your subnet and able to find devices, the dashboard should start populating with different MAC addresses and maybe some names of machines connected to the network. As far as I can tell, you have to still go in and manually add machines like so:

Note that the IPMI port needs a static IP address so your MAAS server knows how to power up and power down each of the slave machines. Rather than set this on the server, I did it in the DHCP static IP settings on my firewall, by finding the MAC address of the server's IPMI port and pinning its address there. That's easier all around: if I need to jigger the IP addresses for some reason, I don't need to go touch the server itself. MAAS also needs the IPMI username/password, which is what keeps your raw hardware from being exposed to the wind.
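Before typing the IPMI details into MAAS, it can save a commissioning round-trip to check them by hand. A minimal sketch, assuming ipmitool is installed on the master and IPMI over LAN is enabled on the server; the function name and the example address and user below are hypothetical.

```sh
# Sketch: ask a server's IPMI port whether the chassis is powered on.
# The password is read from the IPMI_PASSWORD environment variable (-E)
# so it stays out of the process list.
ipmi_power_status() {
    host=$1
    user=$2
    # -I lanplus: IPMI v2.0 over the network
    ipmitool -I lanplus -H "$host" -U "$user" -E chassis power status
}
```

For example, export IPMI_PASSWORD and then run ipmi_power_status 10.0.0.201 root, using the static address you pinned for that server's IPMI port. If this answers, the same address, user and password should work in the MAAS power settings.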

Once you have this set, save the machine and it should immediately detect the power status of the box, then start the Commissioning process. MAAS will power up the slave, which, if you've configured it to PXE boot properly, makes it send a DHCP request; the reply carries the IP address of the MAAS server and the filename of the image to download from it. The image boots up, runs a few tests and reports them back to MAAS, and you'll see the Commissioning tab populate with data.

Aaaaaand, that's a wrap folks!