Grumblings About MAAS

My last post was all about getting Metal-as-a-Service running. I must have spent a month of free time trying to get that to work. Once I understood all the moving parts, I was incredibly disappointed by how complicated MAAS makes it, and by how poorly it is explained in so many places online. I naturally wanted to do more with less. MAAS requires a lot of active resources (3GB of RAM plus some fraction of a CPU) for something that is largely passive, and creating new images to remote boot is only supported with a paid subscription. While it is slick looking and kind of cool for what little it does, it pushes you hard towards using Juju. Juju is quite heavy and runs continuously, eating another 3GB of RAM and a CPU or two on your master server. I didn't use it, because my impression is that it tries to be both the orchestration of Kubernetes and the application bundling of Docker at once. I literally only want to play with K8s and Docker, not find a substitute for them. So I dumped MAAS. I came away with some key learnings:

- IPMI is great for controlling the power state of remote machines.

- PXE booting is how you deliver OS upgrades, or indeed all OS images if you can.

- You have to tweak your DHCP server to hand out the correct TFTP server address for PXE booters to pick up their boot image.

- iPXE is tiny but awesome. It is a little bootloader that speaks HTTP so you can pick up a larger OS payload from a normal website (internal or external).

My Clarified Objective

I want to use a single master server to control a small set of slave servers that do have some storage, but essentially run ephemeral microservices in Docker via Kubernetes and can be shut down gracefully (ideally) at any time. I'd like the slaves to check in with the master when they boot up so they can be added to the control plane easily without manual configuration.

Enter RancherOS

There is a pile of dead technologies out there. Finding live projects with a future is like picking through a civil war battlefield searching for your best friend, hoping he's still alive among the corpses. As far as I could tell, Rancher is one of the few companies that has a number of very interesting projects going on and is moving in the right direction. K3OS is one I wish were ready for my needs, but it's a little early and maybe too lightweight, as a Kubernetes-enabled operating system meant for Raspberry Pi style "edge computing". It appears to be an even slimmer version of its big brother, RancherOS, which is a Docker-first operating system that clocks in at ~100 MB. That's it. The whole thing. Why? Because it runs almost every process in the entire Linux operating system, except the kernel, in a Docker container. I'm not certain if the first thing it does is download a bunch of Docker images or if they're baked in. In a nutshell, the OS is super-lean and runs all the normal system applications inside a Docker context called System Docker. There is another one called User Docker where microservices and non-OS applications run. It makes me terribly happy to know someone thought to do this, and that it appears to still be in development. There's something wonderful about being able to inspect every process uniformly, detect how it interacts with other processes, and launch or shut down everything in the operating system with the same commands. Amazing!

How about some useful stuff?

Enough jabbering. So that the examples make sense: 10.0.0.200 is the master server, and 10.0.0.250 is the bare metal box we'll be provisioning. The master has Docker installed. That's pretty much it. Well, this isn't a tech post unless there's some bash going on, so let's get to it!

iPXE

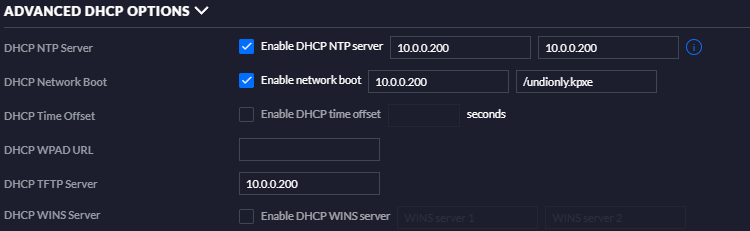

Some prerequisite BIOS tweaks are required to get a server working with this config. First, set your server to boot in BIOS mode, not UEFI (it changes which images the bootloader will accept). Make sure the server's regular network card (not the IPMI one) has PXE boot and DHCP enabled, and that this NIC is the first natural boot device. You might want a USB thumb drive or DVD to come first in the boot order, in case you need to boot a Linux rescue .iso for some reason. Finally, remember to configure your router's DHCP server with the PXE parameters shown here. This is my Ubiquiti Security Gateway, so your DHCP server will probably look different, but the settings that matter are the TFTP server IP, enabling network boot, and setting the filename PXE booters use to /undionly.kpxe. While mucking about in there, you probably want to assign static IP addresses to your slaves based on their MAC addresses, so they don't move around on you.

The setup for this is really quite simple. I started with the rancheros-pxe-bootstrap repository and cloned it, then tweaked it a bit. It comes with some unnecessary complications, but the gist of it is you need a TFTP server and a Web server, because the TFTP server hands your bare metal box a tiny, custom-configured iPXE boot image that has instructions burned into it on how to reach your Web server once it wakes up. The Web server contains whatever OS image you actually want to boot.

The instructions for iPXE are stored in a file called installer.pxe. My version looks like this:

#!ipxe
# Boot a persistent RancherOS to RAM
# Location of Kernel/Initrd images
#set base-url http://releases.rancher.com/os/latest
set base-url http://10.0.0.200:83
dhcp
kernel ${base-url}/vmlinuz panic=10 rancher.cloud_init.datasources=[url:http://10.0.0.200:83/installer-init.yaml]
initrd ${base-url}/initrd
boot

The base-url is just a convenience variable, but it makes it easy to switch between the current latest-and-greatest RancherOS image and whatever I have sitting on my local server on port 83. The instructions tell iPXE to wait for an IP address from DHCP, then load the kernel over HTTP with a specific set of command-line arguments, including the URL of a cloud-config YAML file that is also hosted on my internal network. Finally, it loads the initrd over HTTP and begins the boot process.
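If you flip between the public release URL and a local mirror often, the script can be generated instead of edited by hand. This is only a sketch: render_ipxe and its two arguments are hypothetical names of mine, not part of the rancheros-pxe-bootstrap repository, and the output simply mirrors the file shown above.

```shell
#!/bin/sh
# render_ipxe BASE_URL CONFIG_URL
# Print an iPXE script equivalent to the installer.pxe above.
#   BASE_URL   - where vmlinuz and initrd are served from
#   CONFIG_URL - where the cloud-config YAML is served from
render_ipxe() {
    # \${base-url} is escaped so it reaches iPXE literally;
    # ${1} and ${2} are expanded by the shell right here.
    cat <<EOF
#!ipxe
set base-url ${1}
dhcp
kernel \${base-url}/vmlinuz panic=10 rancher.cloud_init.datasources=[url:${2}]
initrd \${base-url}/initrd
boot
EOF
}

# Usage:
#   render_ipxe http://10.0.0.200:83 http://10.0.0.200:83/installer-init.yaml > installer.pxe
```

The generated file still has to be compiled into an iPXE image before it does anything.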

Be aware that changing the file alone does not make an iPXE image. You still need to compile it into the image you want to host on TFTP with this clever little docker image command:

docker run -it -v $(pwd):/custom-pxe -e PXE_FILE=installer.pxe vtajzich/ipxe

This command will read the installer.pxe file in the current folder and compile it into undionly.kpxe. Move the compiled .kpxe file to the host folder you bind to the TFTP server, as that's the file each PXE boot will fetch and run.
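The "move the compiled file" step is easy to forget, so here is a minimal sketch of it. It assumes the build leaves undionly.kpxe in the folder you pass as the first argument, and that ./srvtftp is the folder bound to the TFTP container; publish_kpxe is a hypothetical helper name.

```shell
#!/bin/sh
# publish_kpxe SRC_DIR TFTP_DIR
# Copy SRC_DIR/undionly.kpxe into TFTP_DIR, refusing to publish a
# missing or zero-byte image (which would leave PXE clients with nothing to boot).
publish_kpxe() {
    src="$1/undionly.kpxe"
    dest_dir=$2
    if [ ! -s "$src" ]; then
        echo "missing or empty: $src" >&2
        return 1
    fi
    mkdir -p "$dest_dir" && cp "$src" "$dest_dir/undionly.kpxe"
}

# Usage, after the docker run command above has finished:
#   publish_kpxe . ./srvtftp
```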

docker-compose.yaml

---
version: "2"
services:
  cloud-init:
    image: fnichol/docker-uhttpd
    restart: unless-stopped
    volumes:
      - ./www:/www
    ports:
      - "83:80/tcp"
    container_name: rancheros_www
  ipxe:
    build:
      context: ./ipxe
    image: ipxe
    restart: unless-stopped
    volumes:
      - ./srvtftp:/srv/tftp
    ports:
      - "69:69/udp"
    container_name: rancheros_ipxe

My simplified version uses an off-the-shelf micro HTTP server that simply needs to host a few files. Important note: the TFTP server requires exposing port 69, which cannot be changed, because it's built into the ROM of the PXE hardware. Remember to run docker-compose build to generate the ipxe docker image. You only need to do this once, as the other files are mounted into the containers via host folders.
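Before rebooting a server to find out whether the two containers are reachable, it can be quicker to fetch the files from another machine on the network. The sketch below assumes curl was built with TFTP support (most distro builds are) and that the kernel, initrd and cloud-config already sit in ./www under the names used in this post; boot_urls and check_boot_endpoints are hypothetical helper names.

```shell
#!/bin/sh
# boot_urls MASTER_IP HTTP_PORT
# Print every URL a PXE client fetches during boot, one per line.
boot_urls() {
    echo "tftp://$1/undionly.kpxe"
    for f in vmlinuz initrd installer-init.yaml; do
        echo "http://$1:$2/$f"
    done
}

# check_boot_endpoints MASTER_IP HTTP_PORT
# Download each URL and discard the body; report ok/FAIL per URL.
# Returns non-zero if any fetch failed.
check_boot_endpoints() {
    rc=0
    for url in $(boot_urls "$1" "$2"); do
        if curl -fsS --max-time 60 -o /dev/null "$url"; then
            echo "ok    $url"
        else
            echo "FAIL  $url"
            rc=1
        fi
    done
    return $rc
}

# Usage:
#   check_boot_endpoints 10.0.0.200 83
```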

To run RancherOS, go to https://github.com/rancher/os/releases/ and download initrd and vmlinuz from github and plop them into your ./www folder. The final file is the installer-init.yaml file, also known as a cloud-config. You will spend a LOT of time figuring out what to put in here, and probably customize it to do many many things, but a simple one that gets you basically running looks like this:

#cloud-config
runcmd:
- >
  hostn=$(
  if [[ `ifconfig|grep B8:CA:3A:5B:9E:D0 | wc -l` =~ '1' ]]; then echo "zeus";
  elif [[ `ifconfig|grep 90:B1:1C:52:97:B2 | wc -l` =~ '1' ]]; then echo "hermes";
  elif [[ `ifconfig|grep B8:CA:3A:6A:D3:70 | wc -l` =~ '1' ]]; then echo "atlas";
  fi) &&
  echo $hostn > /etc/hostname &&
  hostname $hostn &&
  ros config set hostname $hostn
ssh_authorized_keys:
- ssh-rsa AAAA..put.your.ssh.public.key.here...==
rancher:
  debug: true
  network:
    interfaces:
      eth0:
        dhcp: true
  docker:
    storage_driver: overlay2

Note, the very first line must absolutely be #cloud-config or it changes how the file is parsed. And virtually every space and dash is crucial, because it's a YAML file.
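Given the five-minute reboot cycle, a cheap local check before each attempt pays for itself. This sketch only catches the two mistakes mentioned above (a wrong first line, and tabs sneaking into the indentation); it is not a YAML parser, and check_cloud_config is a hypothetical helper name.

```shell
#!/bin/sh
# check_cloud_config FILE
# Fail if the first line is not exactly "#cloud-config" or if the file
# contains tab characters, which YAML does not accept as indentation.
check_cloud_config() {
    file=$1
    if [ "$(head -n 1 "$file")" != "#cloud-config" ]; then
        echo "$file: first line must be exactly #cloud-config" >&2
        return 1
    fi
    if grep -q "$(printf '\t')" "$file"; then
        echo "$file: contains tab characters; indent with spaces" >&2
        return 1
    fi
}

# Usage:
#   check_cloud_config ./www/installer-init.yaml && echo "looks sane"
```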

Also worth noting is that you can certainly treat your servers more like cattle and less like pets. In my specific circumstance, I fully expect to be digging into screwed up machines and rebooting them often, so I want to set their hostname based on a convention. The easiest and cleanest way to do that is to set a DHCP option called host-name that lets you set the hostname based on the MAC address of the server when it requests an IP address. In my case, the trouble to set this up makes it untenable, so I threw together a quick script that fires at boot time to detect and automatically set the hostname locally before anything else really gets going, and naturally being Linux, you have to do it in three places. Just delete the runcmd block entirely if you don't need it.
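If the list of machines grows, the if/elif chain gets unwieldy. One alternative, sketched here, is a case statement keyed on the MAC address, reading addresses from /sys/class/net rather than parsing ifconfig output. I am assuming /sys is readable from wherever runcmd executes; hostname_for_mac and pick_hostname are hypothetical names, and the MAC-to-name table is the same one used in the cloud-config above.

```shell
#!/bin/sh
# hostname_for_mac MAC
# Map a MAC address (any letter case) to a hostname; fail if unknown.
hostname_for_mac() {
    mac=$(printf '%s' "$1" | tr 'A-F' 'a-f')
    case "$mac" in
        b8:ca:3a:5b:9e:d0) echo "zeus" ;;
        90:b1:1c:52:97:b2) echo "hermes" ;;
        b8:ca:3a:6a:d3:70) echo "atlas" ;;
        *) return 1 ;;
    esac
}

# pick_hostname
# Print the name for the first local NIC whose MAC is in the table.
pick_hostname() {
    for f in /sys/class/net/*/address; do
        [ -r "$f" ] || continue
        if name=$(hostname_for_mac "$(cat "$f")"); then
            echo "$name"
            return 0
        fi
    done
    return 1
}

# Usage:
#   hostn=$(pick_hostname) && hostname "$hostn"
```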

IPMI

IPMI has been around a long while and lots of servers you're likely to pick up have it. There are similar systems on different platforms with different interfaces, such as ILO and Redfish. The idea is the same... it's like a miniature computer that is always available even when the server is off, and has its own ethernet jack just to report on the health of the machine. It can also turn on and off the power supplies, completely ignorant of the operating system status. So be careful!

There's an open source tool called ipmitool that gives you complete control over the machine remotely. I don't like there being built packages littered on my servers, so I found there is a great docker image that lets you launch ipmitool directly and execute a single command with it. I count that as an early Christmas gift.

Since IPMI only responds to requests, it does not require any special networking privileges or setup. I split out the IPMI_PASSWORD as a proper environment variable here because it's good practice, but if you're lazy, you can supply it on the command line as -e IPMI_PASSWORD=youripmipw. Just don't tell anyone I said so. Note, you will want to substitute the IP address for the IPMI address of the box you want to control, not its normal IP address... you know, the one that goes dark when the box is turned off? Some amount of digging through the BIOS is required to set up a server initially, such as setting the IPMI IP address, the username and password that can be used to control the machine remotely. Make sure the IP address is blocked out as reserved in your router's DHCP configuration, or you may cause trouble when something else gets a conflicting address by mistake.

# set your password so it doesn't show in process list
export IPMI_PASSWORD=youripmipw

# check power status
docker run -it --rm -e IPMI_PASSWORD kfox1111/ipmitool -E -I lanplus -H 10.0.0.250 -U youripmiuser power status

# power one machine off
docker run -it --rm -e IPMI_PASSWORD kfox1111/ipmitool -E -I lanplus -H 10.0.0.250 -U youripmiuser power off

# power one machine on
docker run -it --rm -e IPMI_PASSWORD kfox1111/ipmitool -E -I lanplus -H 10.0.0.250 -U youripmiuser power on

# reboot one machine
docker run -it --rm -e IPMI_PASSWORD kfox1111/ipmitool -E -I lanplus -H 10.0.0.250 -U youripmiuser power cycle

Login to your brand new RancherOS box!

docker run -it --rm -e IPMI_PASSWORD kfox1111/ipmitool -E -I lanplus -H 10.0.0.250 -U youripmiuser power on
ssh -i /home/youruser/.ssh/privatekey.pem rancher@10.0.0.250

Your bare metal machine should boot up, load the iPXE stub, turn around and load the vmlinuz/initrd, and boot RancherOS. All of this will work once configured.
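The ipmitool invocations in this post differ only in the IPMI address and the final power action, so they can be wrapped in a small function. This is a sketch with a hypothetical name (ipmi_power) and a hypothetical DRY_RUN switch that prints the command instead of running it. I dropped -it here because docker refuses to allocate a TTY when the command runs from a script or cron job without one.

```shell
#!/bin/sh
# ipmi_power BMC_IP USER ACTION
# Run "power ACTION" against the IPMI address BMC_IP via the ipmitool image.
# ACTION must be one of: status, on, off, cycle.
# IPMI_PASSWORD must already be exported; -E tells ipmitool to read it.
# If DRY_RUN is non-empty, print the command instead of executing it.
ipmi_power() {
    case "$3" in
        status|on|off|cycle) ;;
        *) echo "unsupported power action: $3" >&2; return 2 ;;
    esac
    # Rebuild the positional parameters as the full docker command line.
    set -- docker run --rm -e IPMI_PASSWORD kfox1111/ipmitool \
        -E -I lanplus -H "$1" -U "$2" power "$3"
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage:
#   export IPMI_PASSWORD=youripmipw
#   ipmi_power 10.0.0.250 youripmiuser status
```

Rejecting unknown actions up front is deliberate: a typo should never reach a tool that can cut power to a running machine.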

Problems I Ran Into

It wouldn't be right if things went according to plan. The most challenging part of all this wasn't setting up the servers and building the docker images. It was working out from the RancherOS documentation how it was going to interpret the cloud-init file. The turnaround time is about 5 minutes to reboot a server, so it took hours and hours to get results back from my tests. It turns out that a few dumb things conspired against me.

- Don't start off using "--" in your kernel parameters. Do that later, once things are working. If you put your cloud-init file after the double-dash, it won't bother looking at it, and none of your SSH keys will get loaded in... or any other configuration changes you make.

- Don't put "tls: true" under a Docker tag in the YAML file unless you know how to configure it properly. That's not sufficient to set up TLS, and it will kill both your system-docker and docker instances, leaving you with an empty operating system running nothing.

- The firmware for the Broadcom NIC that is in one of my servers was removed from the RancherOS distro earlier this year. It wasn't a huge problem to unpack and repack the initrd to contain my firmware, but it was a stumbling block, and a couple more hours to learn how to do it right.

- ....likely a ton more things I blocked out already..
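The double-dash mistake in the first item is mechanical enough to lint for before compiling the iPXE image. A rough sketch, with a hypothetical function name, that only looks at kernel lines and only knows about this one pitfall:

```shell
#!/bin/sh
# datasource_is_visible FILE
# Succeed unless a "kernel" line in FILE places the
# rancher.cloud_init.datasources argument after a standalone "--".
datasource_is_visible() {
    ! grep -E '^kernel ' "$1" |
        grep -Eq ' -- .*rancher\.cloud_init\.datasources='
}

# Usage:
#   datasource_is_visible installer.pxe || echo "cloud-config would be ignored" >&2
```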

Happy Ranching!